How convincing they are ..

8 June 2025

Kitt

All photos here are AI-generated.

I use Arch btw ..

13 April 2025

Kitt

วันสงกรานต์ พ.ศ. 2568

วันสงกรานต์ปี 2568 เป็นปี จ.ศ. (2568 – 1181) = 1387 วันเถลิงศก ตรงกับ(1387 * 0.2587)+ floor(1387 / 100 + 0.38)– floor(1387 / 4 + 0.5)– floor(1387 / 400 + 0.595)– 5.53375= 358.88625 + 14 – 347 – 4 – 5.53375= 16.3525= วันที่ 16 เมษายน 2568 เวลา 08:27:36 วันสงกรานต์ ตรงกับ16.3525 – 2.165 = 14.1875= วันที่ 14 เมษายน 2568 … Continue reading วันสงกรานต์ พ.ศ. 2568

6 April 2025

Thep

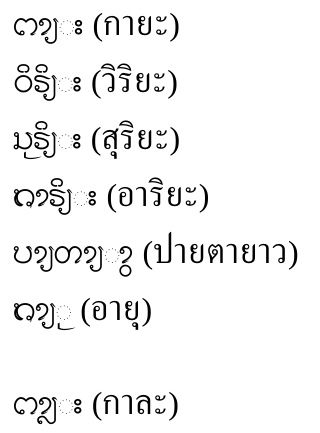

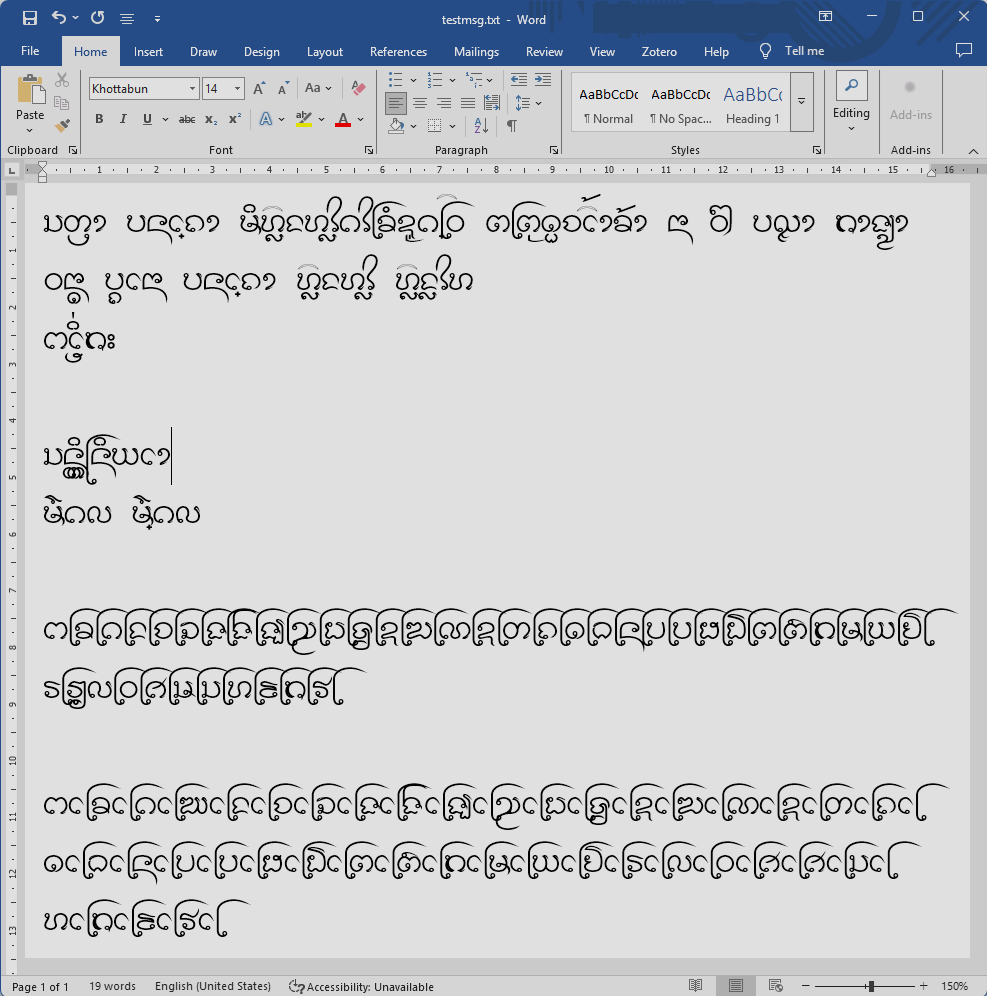

To USE or Not to USE for Lao Tham

จาก blog ที่แล้ว ผมได้เล่าถึงการรองรับอักษรธรรมในปัจจุบันที่ text shaping engine ต่างๆ หันมาใช้ Universal Shaping Engine (USE) ตามข้อกำหนดของไมโครซอฟท์ โดย USE เองเป็น engine ครอบจักรวาลที่มีการจัดการภายในตามคุณสมบัติของอักขระ Unicode เช่น การสลับสระหน้ากับพยัญชนะต้นสำหรับอักษรตระกูลพราหมี และเรียกใช้ OpenType feature ต่างๆ ในฟอนต์ตามลำดับที่กำหนดไว้

และการจัดการภายในของ USE ก็ทำให้มีข้อเสนอที่จะปรับโครงสร้างการลงรหัสข้อความอักษรธรรมเพื่อให้ทำงานกับ USE ได้ แต่มันก็ยังไม่เข้าที่เข้าทางนัก จึงเกิดแนวคิดที่จะหลบเลี่ยง USE แล้วทำทุกอย่างเองในฟอนต์ แต่ไปติดปัญหาที่ MS Word ที่ไม่ยอมให้หลบได้ง่ายๆ ทำให้เราอยู่บนทางแยกที่ต้องเลือกว่าจะใช้ USE ที่ยังไม่เข้าที่ หรือจะเลี่ยง USE ไปทำทุกอย่างเองโดยทิ้ง MS Word ไว้ข้างหลัง

blog นี้ก็จะวิเคราะห์ต่อ ว่าทางเลือกแต่ละทางมีข้อดีข้อเสียอย่างไร

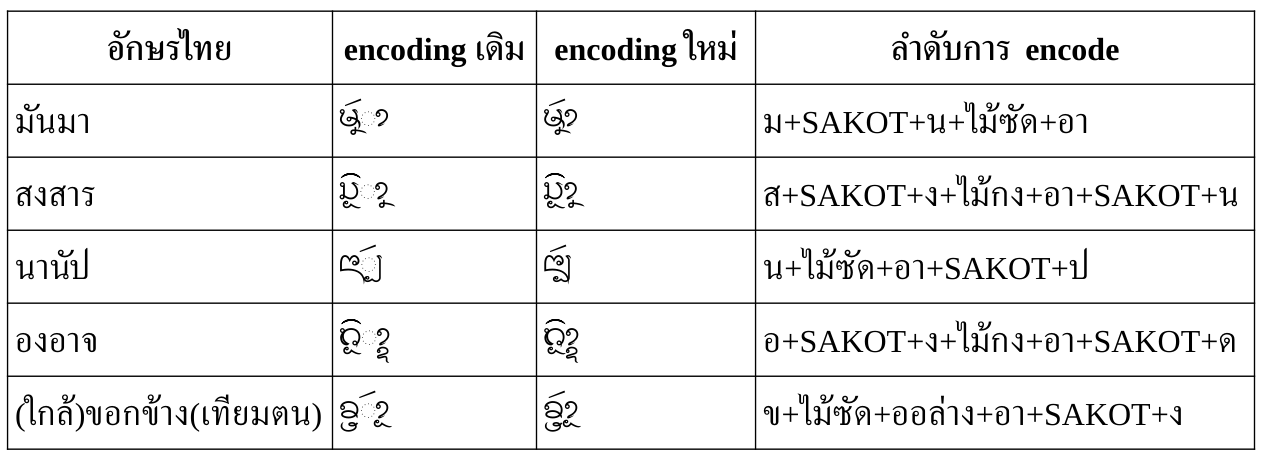

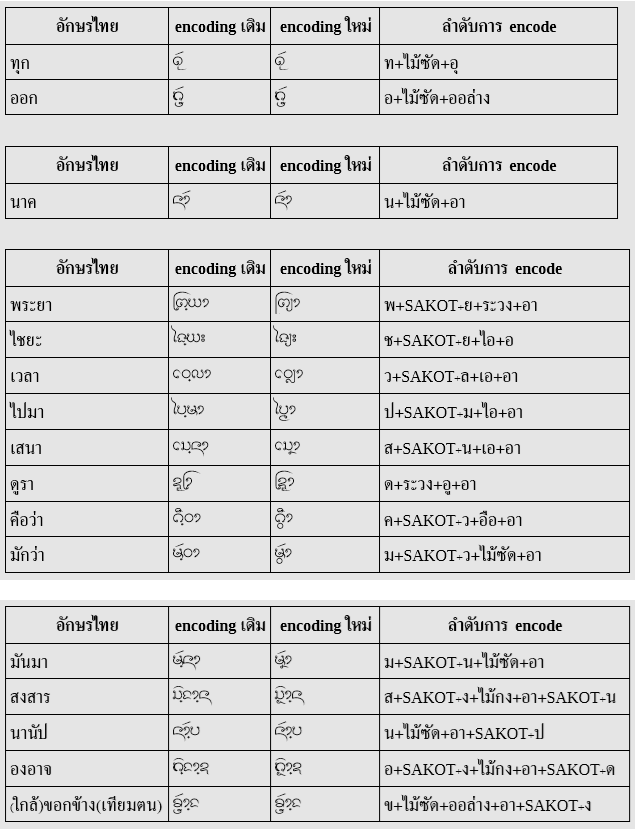

Encoding แบบใหม่

ตามข้อเสนอในเอกสาร Structuring Tai Tham Unicode เมื่อตัดรายละเอียดที่อักษรธรรมลาว/อีสานไม่ใช้ออกไป ก็พอจะสรุปลำดับอักขระในข้อความได้เป็น:

S ::= CC (Vs | Vf)?

โดยที่ CC คือ consonant cluster พร้อมสระหน้า/ล่าง/บน วรรณยุกต์ สระหรือตัวสะกดปิดท้าย

CC ::= C M? V? T? F? C ::= [<ก>-<ฮ><อิลอย>-<โอลอย><แล><ส_สองห้อง><ตัวเลข>] | <ห><SAKOT>[<ก>-<ม>] M ::= <ระวง>? <ล_ล่าง>? (<SAKOT><ว>)? (<SAKOT><ย>)? V ::= Vp? Vb? Va? Vp ::= [<เอ>-<ไม้ม้วน>] // สระหน้า Vb ::= [<อุ>-<อู><ออ_ล่าง>] // สระล่าง Va ::= [<อิ>-<อือ><ไม้กง><ไม้เก๋าห่อนึ่ง>][<ไม้ซัด><ไม้กั๋ง>]? // สระบน T ::= [<ไม้เอก>-<ไม้โท>] F ::= Fs? [<ละทั้งหลาย><ไม้กั๋งไหล><ง_บน><ม_ล่าง><บ_ล่าง><ส_ล่าง><ไม้กั๋ง>]? (<SAKOT> S)? Fs ::= [<อ><ส_สองห้อง><ออย>] | <SAKOT>[<ก>-<ฬ>] // อ ของสระเอือ หรือ ตัวสะกด

สังเกตว่ามี recursion ใน F เพื่อแทนลูกโซ่ของพยางค์ในคำบาลีด้วย

ต่อจาก CC ก็จะตามด้วยสระกินที่ (spacing vowels) ซึ่งแบ่งเป็นสระมีตัวสะกด (Vs) และสระไม่มีตัวสะกด (Vf)

สำหรับสระมีตัวสะกด Vs คือสระอาหรือสระอำ (สระอำในอักษรธรรมไม่ได้มีรหัสอักขระเฉพาะเหมือนอักษรไทย แต่แทนด้วยสระอาตามด้วยไม้กั๋ง (นิคหิต) ความจริงอาจนับไม้กั๋งเป็นตัวสะกดในสระอำก็ได้ แต่กฎนี้พยายามจัดการสระอากับสระอำไปด้วยกัน กลายเป็นว่าสระอำก็ยังมีตัวสะกดได้อีก) พร้อมตัวสะกด Fs (ถ้ามี)

Vs ::= AV Fs? AV ::= [<อา><อาสูง>] <ไม้กั๋ง>? // สระอา สระอำ

ส่วนสระไม่มีตัวสะกด Vf ในตัวเนื้อหาของร่างฯ ได้บรรยายถึงสระเอาะและสระเอือะ แต่ในสรุปส่วนท้ายดูจะลืมสระเอาะไป และยังจัดการ อ ของสระเอือะซ้ำซ้อนกับใน Fs อีก

Vf ::= <อ>? <อะ> // วิสรรชนีย์ของสระเอือะ

Encoding แบบ USE

แม้ encoding แบบใหม่ที่เสนอมีจุดมุ่งหมายเพื่อให้วาดแสดงด้วย USE แต่เมื่อลองใช้กับ USE จริงกลับยังมี dotted circle เกิดขึ้นในหลายกรณี ซึ่งจากการทดลองก็พอจะสังเกตลักษณะของ USE ที่ต่างจากโครงสร้างที่เสนอในร่างดังนี้:

- ลำดับสระใน

Vสระบนมาก่อนสระล่าง ไม่ใช่ตามหลังสระล่างอย่างในร่างฯ ดังนั้นจึงอาจปรับกฎเป็น:V ::= Vp? Va? Vb?

- วรรณยุกต์อยู่หน้าสระกินที่ไม่ได้ แต่ตามหลังได้ และต้องอยู่ก่อนตัวสะกด ดูเหมือน USE จะนับ

Vsเป็นส่วนหนึ่งของV:V ::= Vp? Va? Vb? Vs?

ซึ่งถ้าเป็นเช่นนั้น ก็หมายความว่า USE ก็ยังต้องการการ reorder ให้วรรณยุกต์ไปอยู่ในตำแหน่งก่อนหน้าสระกินที่เพื่อให้สามารถซ้อนบนพยัญชนะได้ด้วย มันจะกลายเป็นความซับซ้อนเกินจำเป็นน่ะสิ

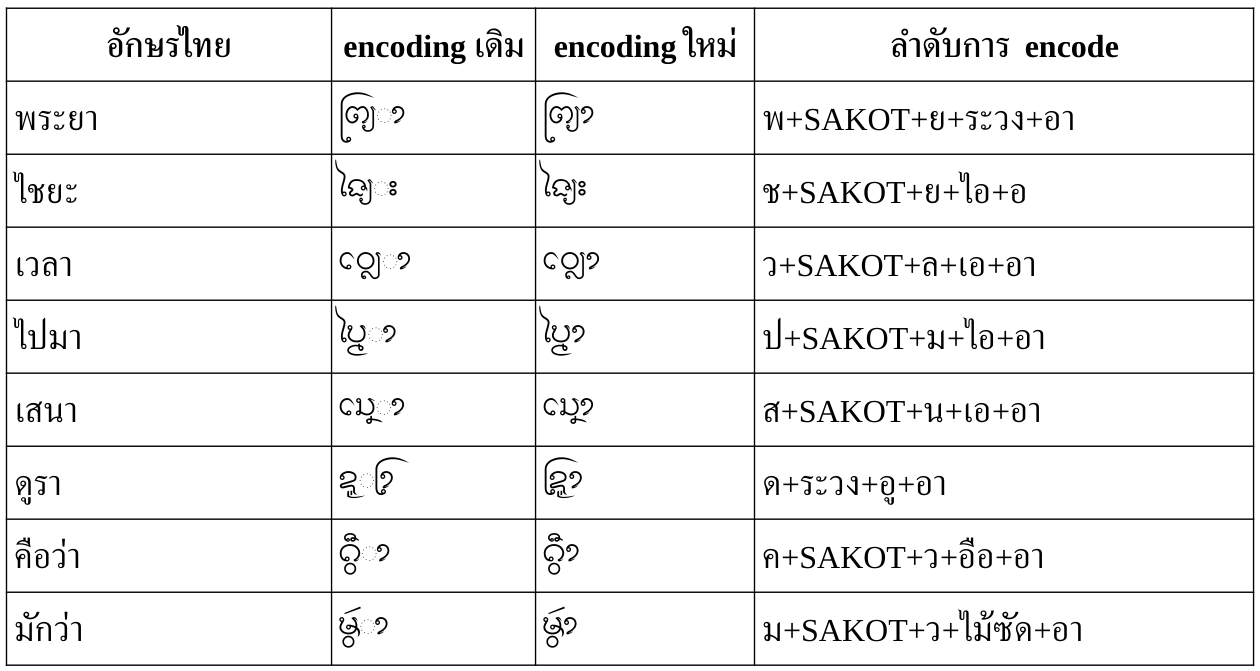

หลักการสำหรับการป้อนข้อความ

สมมติว่าเราใช้ encoding แบบ USE เราจะมีหลักในใจอย่างไร? เราจะใช้ลำดับการพิมพ์แบบที่เราเคยใช้กับอักษรไทยไม่ได้อีกแล้ว เพราะมันคือการ encode แบบกึ่ง visual ไม่ว่าเขาจะยืนยันที่จะเรียกว่า logical order

ยังไงก็ตาม แต่ลำดับการ encode นี้จะไม่ตรงกับลำดับการสะกดคำเสมอไป มันเน้นให้เรียงพิมพ์ได้เป็นหลัก! โดยมีลำดับแบบที่เรียกว่า logical order

มาหลอกให้งงเล่น

หลักการคือ

- ละทิ้งลำดับการสะกดในใจไว้ก่อน พิจารณารูปร่างของข้อความที่ต้องการ แล้ว encode ตามกฎในข้อถัดๆ ไป

- ผสมตัวห้อย ตัวเฟื้อง หรือระวง กับพยัญชนะต้นก่อน โดยมากเป็นตัวควบกล้ำ

- ตามด้วยสระ โดยถ้ามีสระหลายตัว ให้วางสระตามลำดับ หน้า, บน, ล่าง, ขวา

- ตามด้วยวรรณยุกต์

- ตามด้วยตัวสะกด ซึ่งอาจเป็นตัวห้อย, ตัวเฟื้อง, ไม้กั๋ง, ง สะกดบน, หรือไม้กั๋งไหล

- ถ้ามีการเชื่อมพยางค์เป็นลูกโซ่แบบบาลี ก็นับตัวสะกดเป็นพยัญชนะต้นของพยางค์ถัดไปแล้วใส่สระตามได้เลย

ความไม่ปกติของลำดับ

หลักการที่ว่าไปก็ดูปกติดีนี่? แต่ความซับซ้อนของอักษรธรรมทำให้มันไม่ตรงไปตรงมาอย่างนั้น ต่อไปนี้คือกรณีต่างๆ ที่ฝืนความรู้สึก อย่างน้อยก็ในระยะแรก

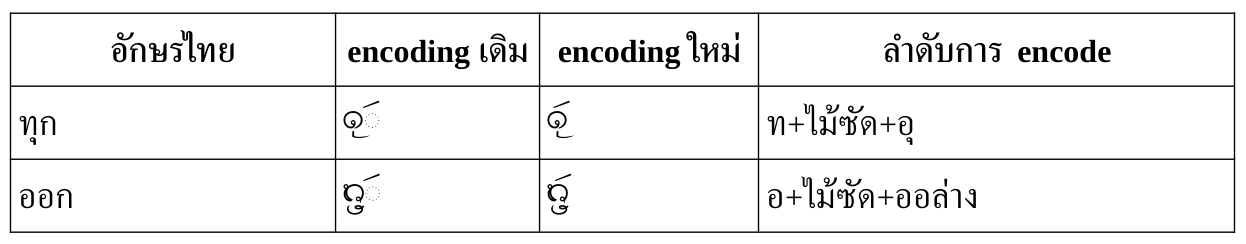

การสะกดแม่กกด้วยไม้ซัด

ไม้ซัดถือว่าเป็นสระบน (Va) ในกฎ ซึ่ง encoding ในร่างฯ สามารถตามหลังสระล่าง (Vb) ได้ แต่ตาม encoding ของ USE ต้องมาก่อนสระล่าง ดังนั้น เมื่อไม้ซัดทำหน้าที่เป็นตัวสะกดแม่กก จึงต้องใช้ลำดับที่ตัวสะกดมาก่อนสระ ไม่ใช่สระมาก่อนตัวสะกด

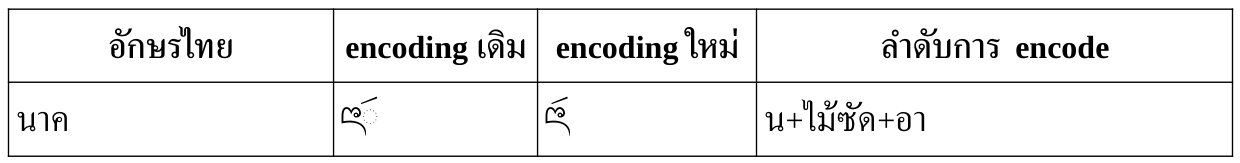

แต่ก็จะมีกรณีที่ไม่สามารถ encode ได้ ไม่ว่าจะตามร่างฯ หรือตาม USE คือเมื่อเป็นตัวสะกดของสระอา

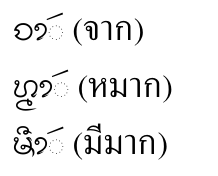

อาจจะยกเว้นคำว่า นาค

ที่สามารถซ่อนลำดับไว้ภายใต้รูปเขียนพิเศษได้

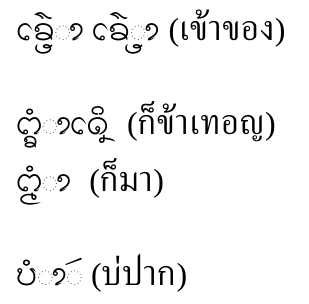

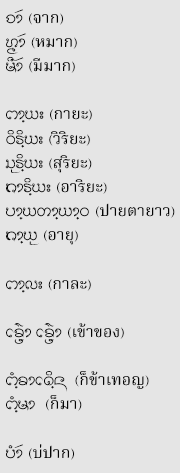

เมื่อมีตัวห้อย/ตัวเฟื้องหรือสระระหว่าง cluster

การเขียนอักษรธรรมในหลายกรณีมีการใช้ตัวห้อย/ตัวเฟื้องเป็นพยัญชนะต้นของพยางค์ถัดไป แทนที่จะใช้ตัวเต็ม ซึ่งในกรณีเหล่านี้ ถ้าวางตัวห้อย/ตัวเฟื้องในตำแหน่งของตัวสะกด ก็จะไม่สามารถวางสระต่อได้อีก เพราะดูเหมือน USE ไม่ได้รองรับ recursion ใน F ตามกฎในร่างฯ และเมื่อแจงต่อไป CC ของพยางค์ถัดไปก็ขาดพยัญชนะต้นตัวเต็ม (C) มาขึ้นต้น CC แต่ถ้า encode โดยเลี่ยงให้ตัวห้อย/ตัวเฟื้องดังกล่าวเป็นส่วนหนึ่งของ CC ของพยางค์ก่อนหน้าก็จะสามารถวางสระต่อได้ แต่ลำดับก็จะดูขัดสามัญสำนึกสักหน่อย

แต่ในบางกรณีก็ไม่เอื้อให้ทำเช่นนั้น เช่น เมื่อมีสระมาคั่นในแบบที่ไม่สามารถสลับลำดับได้

ในคำย่อที่มีการยืมพยัญชนะต้น

บ่อยครั้งที่อักษรธรรมมีการใช้รูปเขียนย่อในลักษณะที่ใช้พยัญชนะต้นตัวเดียวซ้ำในพยางค์มากกว่าหนึ่งพยางค์ ในจำนวนนั้น บางคำอาจสามารถยังจัดลำดับอักขระจนเข้ากับกฎเกณฑ์ของ USE ได้ แม้จะดูเหมือนการเปลี่ยนหน้าที่อักขระ เช่น เปลี่ยนตัวสะกดมาเป็นตัวควบ หรืออาจมีการสลับลำดับสระของพยางค์ต่างๆ

แต่บางกรณีก็ไม่สามารถจัดลำดับให้เข้าเกณฑ์ได้

วิธีหลบเลี่ยง?

อย่างไรก็ดี ปัญหาทั้งหมดนี้สามารถหลบเลี่ยงได้ โดยลบ glyph DOTTED CIRCLE (uni25CC) ออกจากฟอนต์เสีย แล้ว USE ก็จะไม่แสดง dotted circle อีกเลย!

ที่กล่าวมาทั้งหมดนี้คือการทดสอบกับแอปที่ใช้ Harfbuzz แต่สุดท้าย เมื่อนำไปทดสอบกับ MS Word ปรากฏว่ามันก็ยังไม่ยอมง่ายๆ อยู่ดี โดยปัญหาหลักที่พบมีสองข้อ ข้อแรกคือกฎของตัวห้อย/ตัวเฟื้องจะไม่ทำงานถ้าไม่ได้ตามหลังพยัญชนะต้นทันที เช่น เป็นตัวสะกดของสระอา หรือมีสระอื่นมาคั่นกลาง กล่าวคือ

ดูจะถูกเรียกเฉพาะในตำแหน่งพยัญชนะซ้อนกันเท่านั้น ไม่เรียกในตำแหน่งตัวสะกด และอีกข้อหนึ่งคือกฎ ligature สำหรับ blwfนา

ไม่ทำงานเมื่อมีอักขระซ้อนบน/ล่าง

สรุป

ประเด็นต่างๆ ที่พบ พอจะสรุปได้ตังตาราง

| ประเด็น | ใช้ USE | เลี่ยง USE |

|---|---|---|

| วิธีการ |

|

|

| การ encode ข้อความ | กึ่ง visual (ขัดสามัญสำนึกนิดหน่อย) | ตามหลักการสะกดคำ |

| แอปที่รองรับ | Harfbuzz app*, MS Word (บางส่วน) | Harfbuzz app*, Notepad |

| อาการเมื่อไม่รองรับ |

|

|

*Harfbuzz app เช่น LibreOffice, Firefox, Gedit, Mousepad ฯลฯ

จึงพอสรุปได้ว่ายังมีความแตกต่างระหว่างข้อกำหนดในร่างฯ กับสิ่งที่ USE รองรับจริง ทำให้ไม่ว่าจะพยายามอย่างไรก็จะมีข้อบกพร่องเกิดขึ้นเสมอ และแอปที่มีปัญหาเสมอๆ ก็คือ MS Word

หากเลือกใช้ข้อกำหนด USE ทุกแอปที่รองรับ USE ก็จะได้การรองรับแบบ เกือบๆ

ครบถ้วน (โดย MS Word จะมีปัญหามากกว่าเพื่อนสักหน่อย) โดยแลกกับลำดับการ encode ที่ผิดธรรมชาติของผู้ใช้ ซึ่งเป็นการแลกที่ผมคิดว่าไม่คุ้ม เพราะจะมีผลให้เกิดเอกสารที่ encode แปลกๆ เกิดขึ้นในระหว่างที่ USE ยังไม่พร้อม แถมสิ่งที่ได้คืนมาก็ยังไม่ใช่สิ่งที่สมบูรณ์เสียด้วย

ในขณะที่หากเลือกหลีกเลี่ยง USE แอปส่วนใหญ่ยกเว้น MS Word ก็จะสามารถจัดแสดงอักษรธรรมได้สมบูรณ์ (ดังกล่าวไว้ใน blog ที่แล้ว) โดยที่ผู้ใช้ก็ยังคงใช้ encoding แบบเก่าได้เช่นเดิม และเมื่อไรที่ USE พร้อม ก็เพียงแปลง encoding ไปเป็นแบบใหม่ (ซึ่งอาจจะอัตโนมัติหรือ manual ก็ค่อยว่ากัน) โดยไม่ต้องมี encoding ชั่วคราวของ USE มาแทรกกลางเป็นชนิดที่สามให้เกิดความสับสนเพิ่ม โดยแลกกับการทิ้ง MS Word ที่ยังไงก็ไม่สมบูรณ์อยู่แล้ว และแนะนำให้ผู้ใช้อักษรธรรมใช้ LibreOffice หรือ Notepad (ซึ่งเป็นผลพลอยได้) ในการเตรียมเอกสารแทน ส่วนเว็บเบราว์เซอร์นั้นไม่เป็นปัญหา เพราะเบราว์เซอร์ส่วนใหญ่ก็ใช้ Harfbuzz เป็นฐานกันอยู่แล้ว การรองรับก็จะเหมือนๆ กับใน LibreOffice นั่นแล

ด้วยเหตุนี้ ผมจึงเลือกที่จะสร้างฟอนต์อักษรธรรมที่ หลีกเลี่ยง USE ต่อไป จนกว่า USE จะพร้อมจริงๆ

1 April 2025

Thep

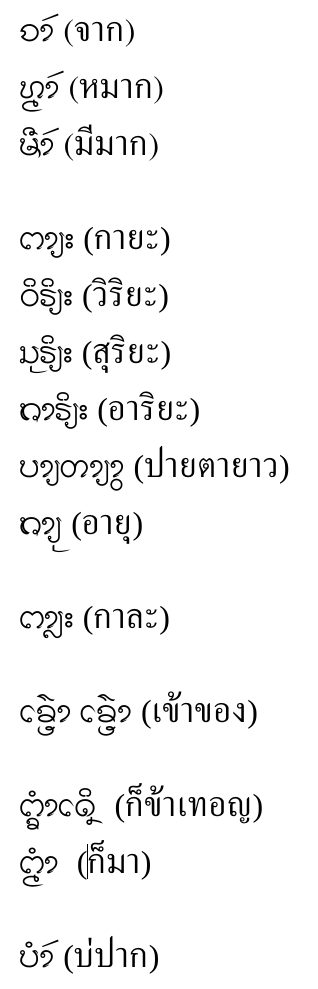

Lao Tham Font vs USE

บันทึกการกลับมาทำฟอนต์อักษรธรรมอีกครั้ง หลังจาก commit ล่าสุด 4 ปีที่แล้ว โดยในช่วงหลังของการทำงานรอบนั้น มีความเปลี่ยนแปลงสำคัญใน Harfbuzz ก่อนที่ผมจะวางมือไปทำอย่างอื่น คือ มีการใช้ Universal Shaping Engine (USE) ตามข้อกำหนดของไมโครซอฟท์ ทำให้กฎบางข้อหยุดทำงาน เช่น การจัดการไม้กั๋งไหล (ลาวเรียก ไม้อังแล่น

) แต่ผมก็ไม่ได้ไปติดตาม

ในเอกสาร Creating and supporting OpenType fonts for the Universal Shaping Engine ของไมโครซอฟท์ ได้อธิบายขั้นตอนต่างๆ ที่ USE จะเรียกใช้กฎในฟอนต์ โดยที่ USE เองก็มีการประมวลผลภายในเองบางส่วนด้วย ตามแต่อักษรที่รองรับ โดยสำหรับอักษรธรรมก็มีเชิงอรรถให้ปวดใจว่า

Note: Tai Tham support is currently limited. Additional encoding work is required for full text representation to be possible.

การรองรับอักษรธรรมยังคงจำกัดอยู่ในตอนนี้ ยังต้องทำงานด้านการกำหนดรหัสกันต่อไปเพื่อจะให้ได้รหัสที่สามารถแทนข้อความได้เต็มที่

ซึ่งเมื่อไปตรวจสอบเอกสารที่เกี่ยวข้อง ก็พบเอกสาร Structuring Tai Tham Unicode (L2/19-365) โดย Martin Hosken ซึ่งเป็นร่างข้อเสนอที่จะปรับลำดับการลงรหัสอักขระอักษรธรรมจาก แบบเริ่มแรก (L2/05-095R) เพื่อการวาดแสดงด้วย USE ซึ่งลำดับแบบใหม่ค่อนข้างแตกต่างจากลำดับการสะกดในใจพอสมควร และข้อเสนอใหม่ก็ยังคงเป็นฉบับร่าง จึงเกิดความไม่แน่นอนว่าแนวทางการลงรหัสปัจจุบันจะเป็นแบบไหน

เนื่องจากพระอาจารย์ชยสิริ ชยสาโร ประสงค์จะให้ผมเพิ่มอักษรธรรมลงในฟอนต์ Laobuhan ของท่าน ผมจึงถือโอกาสทดลองทำตามข้อกำหนดของ USE อันใหม่เสียเลย โดยพยายามทดสอบบน Windows 11 ด้วย นอกเหนือจากที่เคยทดสอบเฉพาะบนลินุกซ์ โดยในข้อกำหนดใหม่ USE มีการ reorder สระหน้าให้ จึงไม่ต้องทำในฟอนต์เองอีกต่อไป สิ่งที่ควรทำ (เรียงตามลำดับการเรียกใช้โดย USE) จึงเป็น:

-

pref(Pre-base forms) เพื่อระบุตำแหน่งของระวง เพื่อที่ USE จะ reorder ไปไว้หน้าพยัญชนะต้นให้ในภายหลัง -

rphf(Reph forms) ความจริงเป็นกฎสำหรับ reorderเรผะ

ของอักษรอินเดีย ที่ดูแล้วน่าจะใช้กับไม้กั๋งไหล

ของอักษรธรรมได้ แต่เมื่อทดลองทำจริงแล้วไม่เป็นผล ซึ่งในเอกสารของไมโครซอฟท์เองก็ระบุไว้ว่า:Note that the category REPHA is not currently supported by USE.

ซึ่งหมายความว่าrphfเองก็จะยังไม่ทำงาน -

blwf(Below-base forms) เพื่อทำตัวห้อย ตัวเฟื้อง -

liga(Standard ligatures) เพื่อทำรูปเขียนพิเศษ เช่นนา

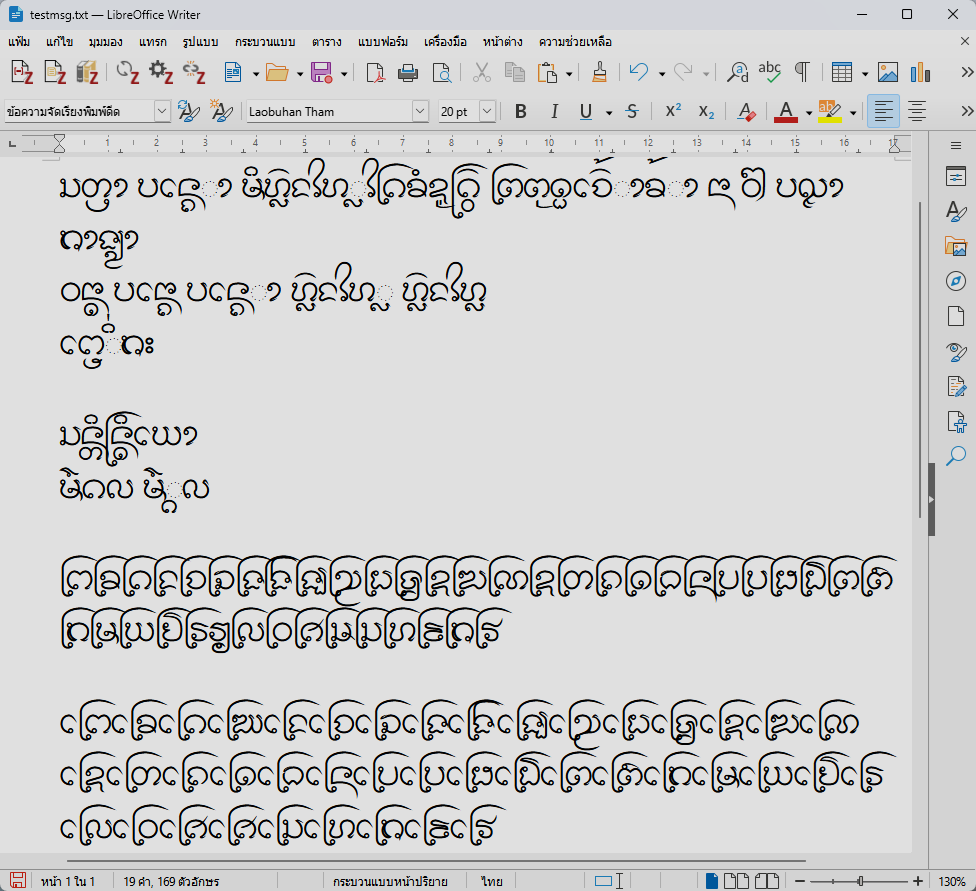

ผลการทดสอบคือ แอปที่ใช้ Harfbuzz (GTK, LibreOffice, Firefox) และ MS Word วาดแสดงอักษรธรรมได้ดี ยกเว้นในบางกรณี เช่น สระอาที่ตามหลังวรรณยุกต์จะมี dotted circle แทรกเข้ามา และตัว ล ห้อย (ที่ไม่ใช้ SAKOT) ที่ใช้ร่วมกับสระหน้าจะแสดงถูกต้องเฉพาะเมื่อลงรหัสด้วยลำดับที่เหมาะสมเท่านั้น กล่าวคือ ถ้าใช้ structure ข้อความแบบใหม่ที่ออกแบบให้รองรับ USE ก็ น่าจะ แสดงได้ถูกต้องเป็นส่วนมาก

อักษรธรรมบน LibreOffice Writer แบบใช้ USE

อักษรธรรมบน LibreOffice Writer แบบใช้ USE

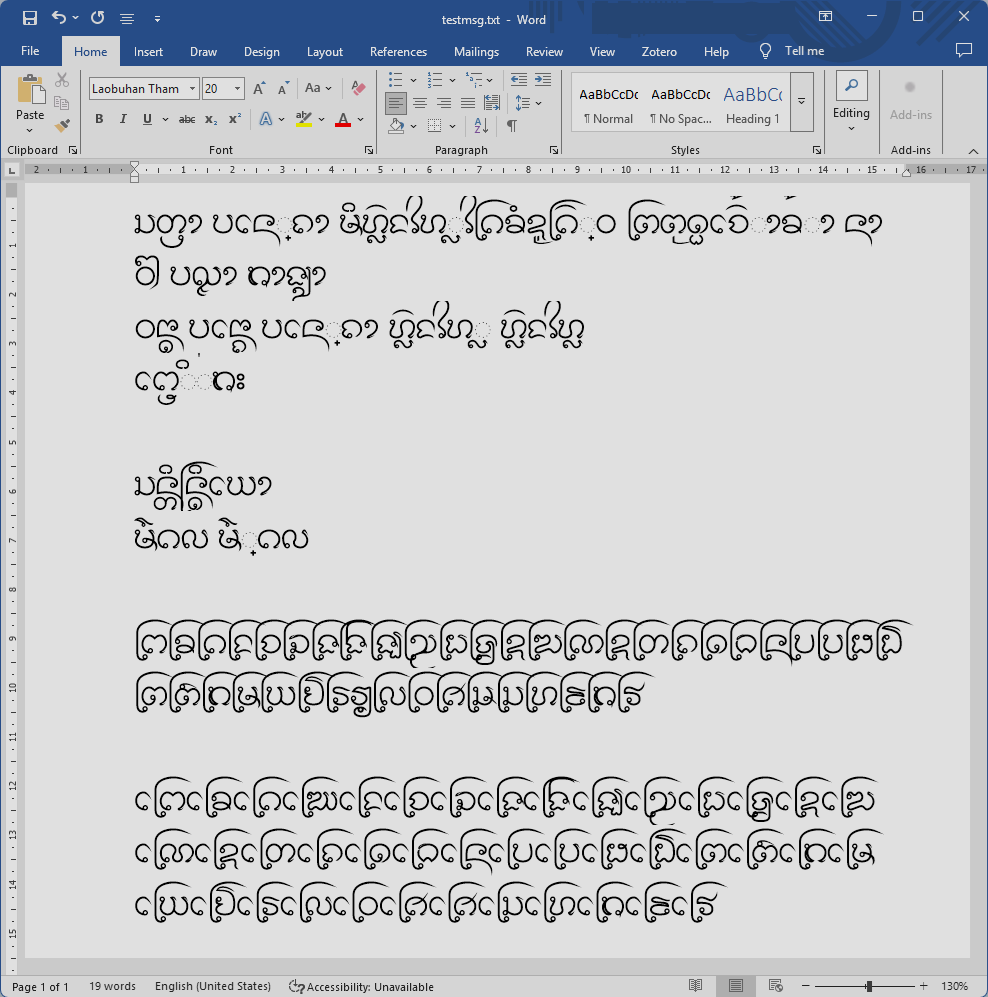

อักษรธรรมบน MS Word แบบใช้ USE

อักษรธรรมบน MS Word แบบใช้ USE

แต่กับ Notepad บน Windows ดูเหมือนกฎต่างๆ จะไม่ทำงานเลย! สถานการณ์อาจต่างจากลินุกซ์ที่แอปแทบทุกตัวใช้ Harfbuzz กันหมด แต่บน Windows ยังแยก implement กันอยู่

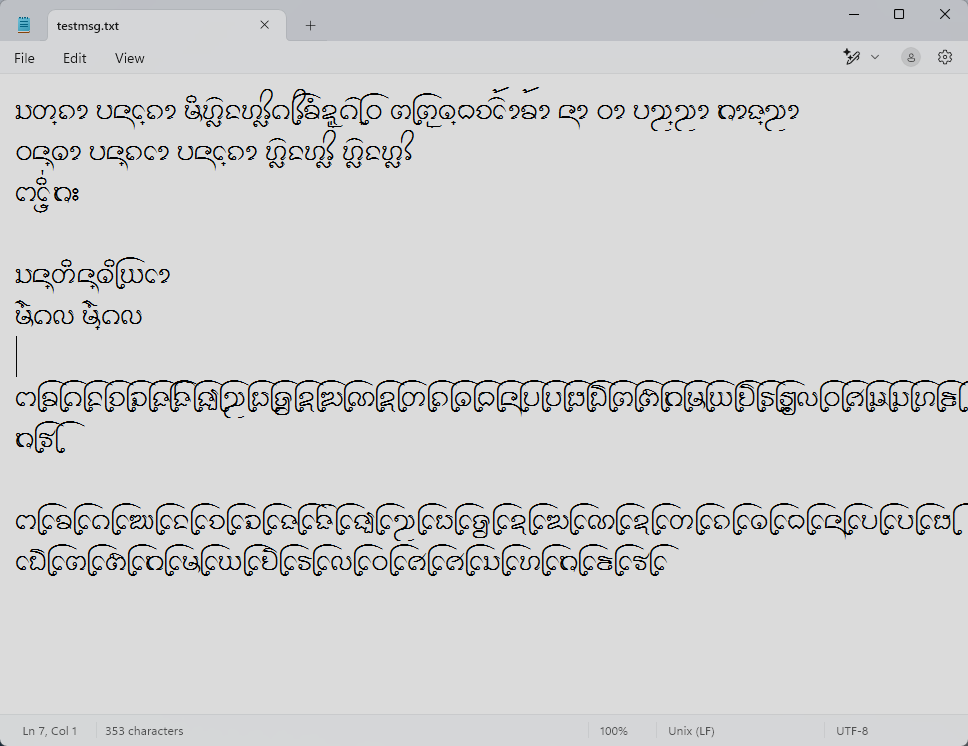

อักษรธรรมบน Windows Notepad แบบใช้ USE

อักษรธรรมบน Windows Notepad แบบใช้ USE

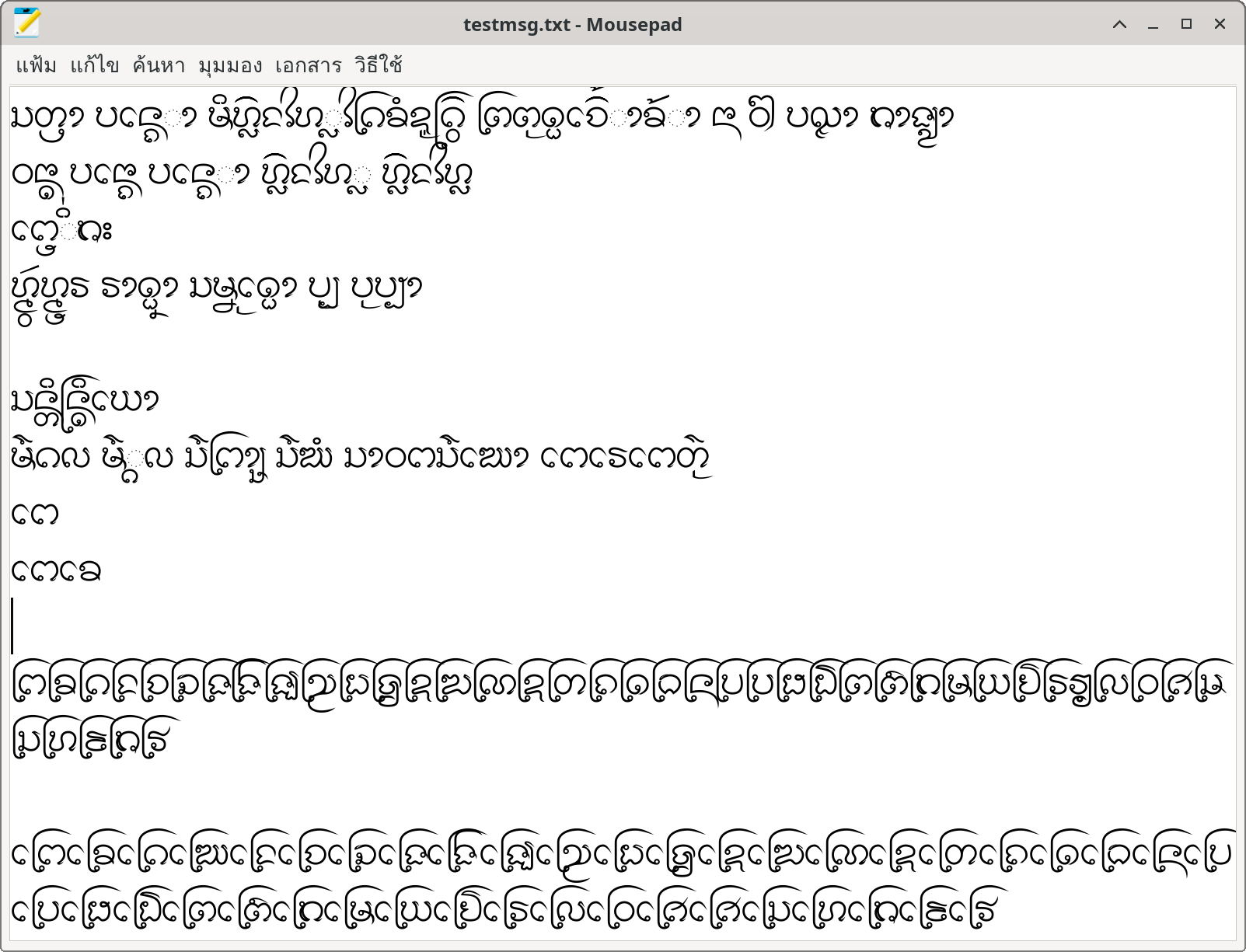

อักษรธรรมบน Linux Mousepad แบบใช้ USE

อักษรธรรมบน Linux Mousepad แบบใช้ USE

แต่ปัญหา dotted circle ในบางกรณีของ USE ก็ยังทำให้ผมต้องค้นหาข้อมูลต่อ ทั้งไล่ซอร์สโค้ดของ Harfbuzz ทั้งค้นเว็บ จนไปเจอ โพสต์ของ Richard Wordingham เล่าปัญหาเดียวกันที่เขาเจอ โดยได้พูดถึงวิธีหลบเลี่ยง USE ด้วย โดยในทุกกฎ GSUB, GPOS ไม่ต้องระบุรหัสอักษรเป็น

เลย เพียงใช้รหัสอักษร lana

ก็พอ ซึ่งถ้าเป็น Harfbuzz ก็จะไปเรียก default engine แทน USE ซึ่งฟอนต์ก็ต้องเตรียมกฎ GSUB ให้มันทำงานทุกอย่างแทน USE ทั้งหมด ผ่าน OpenType feature เท่าที่ใช้รองรับ Standard Scripts ซึ่งก็จะมี feature จำนวนจำกัดให้ใช้ เช่น DFLT

และ ccmp

เป็นต้นliga

เรื่องกฎไม่ใช่เรื่องยาก เพราะฟอนต์ Khottabun ที่ผมสร้างตั้งแต่ก่อนที่ Harfbuzz จะใช้ USE ก็ทำทุกอย่างเองหมดอยู่แล้ว ก็เลยหยิบมาทดลองได้ทันที โดยตัดรหัสอักษร

ออกจากกฎ lana

ที่ใช้ทั้งหมดccmp

ผลปรากฏว่า มันยังคงวาดแสดงได้อย่างสมบูรณ์บน Harfbuzz แต่คราวนี้มันทำงานบน Notepad บน Windows ด้วย! โดยในทั้งสองกรณี กฎที่เขียนไว้สำหรับไม้กั๋งไหลที่เคยไม่ทำงานบน Harfbuzz ก็กลับทำงานเรียบร้อย! (ดูตัวอย่างคำว่า มงฺคล แบบแรก)

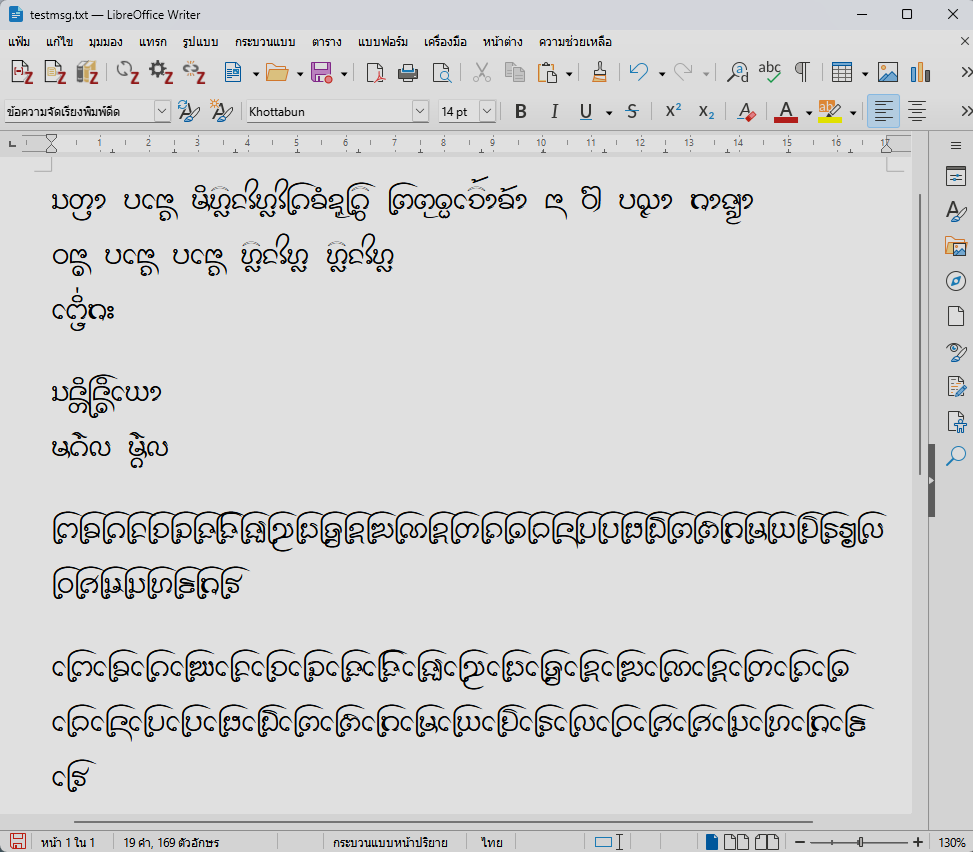

อักษรธรรมบน LibreOffice Writer แบบใช้รหัสอักษร

อักษรธรรมบน LibreOffice Writer แบบใช้รหัสอักษร DFLT อักษรธรรมบน Windows Notepad แบบใช้รหัสอักษร

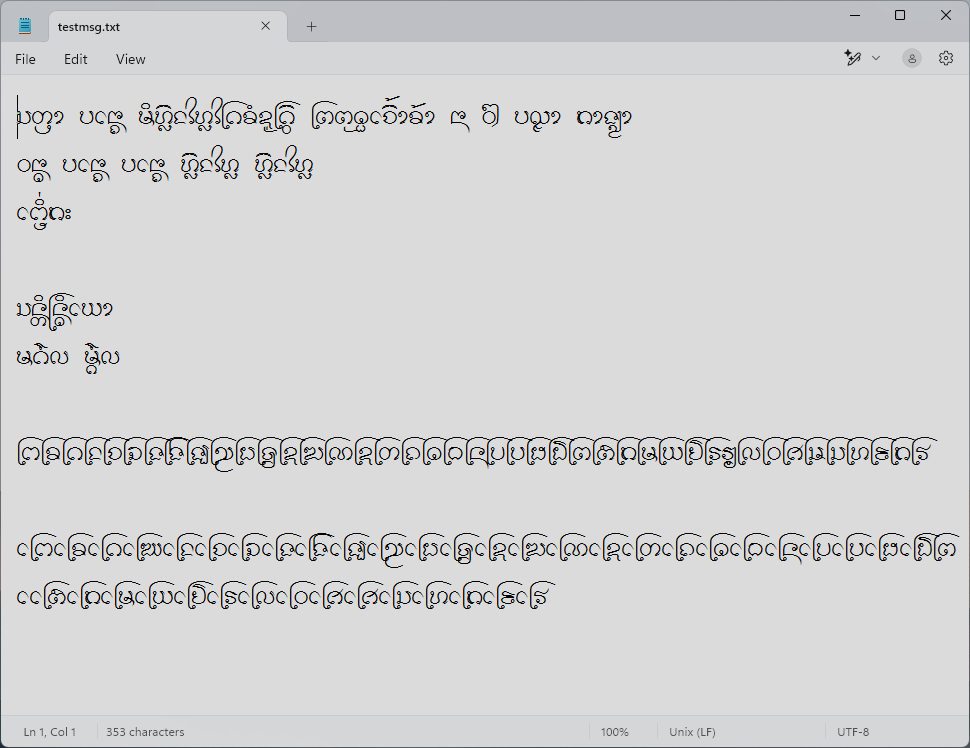

อักษรธรรมบน Windows Notepad แบบใช้รหัสอักษร DFLTแต่ข่าวร้ายคือ มันเละบน MS Word

อักษรธรรมบน MS Word แบบใช้รหัสอักษร

อักษรธรรมบน MS Word แบบใช้รหัสอักษร DFLTซึ่งจากการทดลองก็สรุปได้ว่ามันเกิดจาก:

- ข้อจำกัดของ

บน engine ของ MS Word ที่ดูจะไม่รองรับ contextual substitution ที่ซับซ้อน (เข้าใจว่าทำได้แค่ multiple substitution และ ligature substitution อย่างง่ายเท่านั้น)ccmp - engine ของ MS Word ยังคงใช้ USE เช่นเดิม ทำให้ถึงแม้จะเปลี่ยนไปใช้

ที่สามารถทำ contextual substitution ซับซ้อนได้ ก็จะเกิดการ reorder สระหน้าซ้ำซ้อนกันระหว่างโดยตัว engine เองก้บโดยกฎในฟอนต์จนสระหน้าสามารถเลื่อนข้ามพยัญชนะไปได้ถึง 2 ตำแหน่งliga

ถ้าคิดตามหลักเหตุผลแล้ว ถ้า

ที่ USE เรียกใช้ในขั้น preprocessing สามารถทำงานได้อย่างถูกต้อง โดยให้กฎแทรก glyph ผีสักตัว (เช่น ZWNJ) ไว้หน้าสระหน้าที่สลับลำดับแล้ว เพื่อให้มันคั่นระหว่างสระหน้ากับพยัญชนะที่อยู่ก่อนหน้าไว้ ก็ควรจะสามารถป้องกัน USE ไม่ให้ reorder ซ้ำอีกได้ แต่ในเมื่อ DirectWrite engine ที่ MS Word ใช้ไม่รองรับ ccmp

ที่ซับซ้อน (แบบที่ Harfbuzz ทำ) มันก็จบเห่ตั้งแต่ต้น ส่วน ccmp

หรือ liga

ที่รองรับ contextual substitution แบบซับซ้อนได้ ก็ถูกเรียกทำงานหลังการ reorder ภายในของ USE ไปแล้ว จึงไม่สามารถควบคุมอะไรได้ทันการณ์clig

ในเมื่อ USE ก็ไม่สมบูรณ์ จะเลี่ยง USE ก็ติดปัญหากับ MS Word ผมจึงอยู่บนทางเลือก:

- ใช้ USE ไปเลย แล้วรอให้มีการแก้ปัญหาของ USE อีกที

- เลี่ยง USE ต่อไป โดยได้การทำงานของไม้กั๋งไหลเพิ่มมาด้วย แต่ยอมทิ้งการรองรับบน MS Word

- เลี่ยง USE แบบต้องปรับฟอนต์ขนานใหญ่ให้ใช้

ในการ reorder ให้ได้ ผ่าน ligature glyph แล้วค่อยรื้อกลับใหม่อีกทีเมื่อ USE พร้อมccmp

การเลือกครั้งนี้จะไม่ยากเลยถ้าการรองรับอักษรธรรมใน Unicode มีทิศทางที่ชัดเจน แต่ปัญหาคือมันไม่ขยับมา 6 ปีแล้ว

แอปที่ใช้ Harfbuzz นั้นทำงานได้กับทุกทางเลือก ปัญหาอยู่ที่แอปนอกเหนือจากนั้น โดยในที่นี้คือ MS Word กับ Notepad ซึ่งเราต้องเลือกเอาอย่างใดอย่างหนึ่ง ถ้าใช้ USE ก็จะได้ MS Word ที่ทำงานได้เท่าที่ USE รองรับ ถ้าไม่ใช้ USE ก็จะได้ Notepad ที่ทำงานเต็มรูปแบบ

โดย Notepad เองก็ถือเป็นตัวแทนของแอปอื่นๆ ที่รองรับ OpenType แบบผิวเผินด้วย ในขณะที่ MS Word ก็น่าจะเป็นแอปหลักแอปหนึ่งที่ผู้ใช้ฟอนต์จะใช้เตรียมเอกสาร (แต่จะให้ดี LibreOffice เป็นทางเลือกที่เปิดกว้างต่อ solution ต่างๆ มากกว่า)

ผมจะวิเคราะห์ใน blog หน้าว่าจะพิจารณาชั่งน้ำหนักอย่างไรต่อไป

23 March 2025

Vee

I have been told to avoid linked lists.

I've been told to avoid linked lists because their elements are scattered everywhere, which can be true in some cases. However, I wonder what happens in loops, which I use frequently. I tried to inspect memory addresses of list elements of these two programs run on SBCL.

CL-USER> (setq *a-list* (let ((a nil)) (push 10 a) (push 20 a) (push 30 a) a))

(30 20 10)

CL-USER> (sb-kernel:get-lisp-obj-address *a-list*)

69517814583 (37 bits, #x102F95AB37)

CL-USER> (sb-kernel:get-lisp-obj-address (cdr *a-list*))

69517814567 (37 bits, #x102F95AB27)

CL-USER> (sb-kernel:get-lisp-obj-address (cddr *a-list*))

69517814551 (37 bits, #x102F95AB17)

CL-USER> (sb-kernel:get-lisp-obj-address (cddr *a-list*))

69517814551 (37 bits, #x102F95AB17)

CL-USER> (setq *a-list* (let ((a nil))

(push 10 a)

(push 20 a)

(push 30 a)

a))

(30 20 10)

CL-USER> (sb-kernel:get-lisp-obj-address *a-list*)

69518319943 (37 bits, #x102F9D6147)

CL-USER> (sb-kernel:get-lisp-obj-address (cdr *a-list*))

69518319927 (37 bits, #x102F9D6137)

CL-USER> (sb-kernel:get-lisp-obj-address (cddr *a-list*))

69518319911 (37 bits, #x102F9D6127)

In both programs, the list elements are not scattered. So, if scattered list elements were an issue for these simple cases, you probably used the wrong compiler or memory allocator.

18 March 2025

Kitt

GIMP 3.0 Released

The wait is over. Here we go .. https://www.gimp.org/news/2025/03/16/gimp-3-0-released/ We may need to update the scripts, though.

1 February 2025

Kitt

LLM Safety

เรื่องสมมติที่เกิดขึ้นจริง ในฝั่ง cybersecurity เราเริ่มใช้ AI ในการป้องกันมาพักนึงแล้ว และพบการโจมตีมากขึ้นเรื่อย ๆ รวมถึงเห็นภัยคุกคามใหม่ ๆ ที่เชื่อมโยงกับ AI ด้วยเหมือนกันในทางบวก ฝั่งป้องกัน เราใช้ AI ช่วยในการ summarize logs เชื่อมโยง security events เพื่อ discovery การโจมตี discover สิ่งที่ rule-based ทำไม่ได้ หรือ overload มนุษย์มาก ๆ ในทางลบ เราเห็น web crawlers / spiders ฝั่ง AI วิ่งเก็บข้อมูลหน้าเว็บหนักกว่าเดิม 5 – 10 เท่าจากปกติ ซึ่งมันกิน resources เด้อจ้า .. ทั้ง CPU, mem, egress ที่ต้องประมวลผลตอบสนอบขึ้นหมดเลย … Continue reading LLM Safety

29 January 2025

Kitt

DeepSeek-R1

ลองเอา deepseek-r1:1.5b ตัวเล็กสุด มารันใน notebook ตัวเอง มันเก่งจริงอย่างที่หลายคนอวยยศแฮะ response เร็ว กิน resource น้อย (อจก. ตามไปอ่าน architecture ของ deepseek แล้ว ในทางวิศวกรรม #ของแทร่ อยู่เด้อ) ประเด็นคือออ .. model ขนาด 1-2b parameters หลาย ๆ models นี่ คอมพิวเตอร์ทั่ว ๆ ไป มี memory เหลือสัก 1.5-2 GB ก็รันได้แล้วนะครับ ไม่ต้องมี GPU/NPU ช่วย accelerate ก็รันได้ (เครื่อง อจก. ก็ไม่มี GPU/NPU) ยิ่งถ้ามี AI-accelerated (e.g., CPU+NPU, Copilot+ PC) ที่กำลังทยอยออกมาวางขายผู้ใช้งานทั่วไป การรัน … Continue reading DeepSeek-R1

26 January 2025

Kitt

2025/1 Information on UTC – TAI

“NO leap second will be introduced at the end of June 2025. The difference between Coordinated Universal Time UTC and the International Atomic Time TAI is : from 2017 January 1, 0h UTC, until further notice : UTC-TAI = -37 s” — IERS EOP Bulletin C#69

AWS Thailand Region

มาแล้วก็ใช้สิครับ AWS Asia Pacific (Thailand) Region .. \(^^)/ ref. https://aws.amazon.com/local/thailand/

CVE: rsync

หัวทีม debian mirror แจ้งข่าวเข้า mailling list แต่เช้าว่า “สูเจ้าจงอัปเกรด rsync ASAP” ต้นเรื่องคือ 3.3 >= rsync >= 3.2.7 มี heap overflow และอื่น ๆ lot นี้ พบ และ fix ไปแล้ว ~ 6 CVEs ครับ main distros release fixes แล้วเมื่อไม่กี่ชั่วโมงที่ผ่านมา

หมูแฮม

ออกจากดาวหมา มาอยู่บ้านนี้สิบหกปี..วันนี้ลุงหมูแฮมได้กลับดาวละน้าาาาา

คืนชีพ skuld.kitty.in.th

10:00 31 ธ.ค. 2024 หลังจากที่ได้ instant ใหม่ OS เดิม (Ubuntu 16.04) เป็นเครื่องเปล่า ๆ ที่ไม่มีข้อมูล สิ่งแรกที่พยายามทำคือกู้จาก snapshot เดียวที่มีอยู่ ทำให้ได้ kitty.in.th ที่มีข้อมูลถึงประมาณต้นเดือนมีนาคม 2017 manually install All-in-One WP migration ทำ site backup ได้ไฟล์ขนาด 2 GB ซึ่งใหญ่เกิน จะ recovery ได้ฟรี แต่ก็ยังดีกว่าไม่มีอะไรเลย หลังจาก มี backup .wpress แล้ว dump mysql, tar gz web root เก็บ ย้ายมาเก็บที่ notebook อีกหนึ่งสำเนา พร้อมล้างเครื่องแล้ว .. กด … Continue reading คืนชีพ skuld.kitty.in.th

2024 Password Guidelines

รหัสผ่านไม่จำเป็นต้องมีสัญลักษณ์ผสมก็ได้ มันทำให้จำยาก พิมพ์ผิดง่าย และไม่ได้ช่วยให้ปลอดภัยอย่างที่เคยคิด แต่จะต้องตั้งรหัสผ่านให้ยาว ๆ (ต่ำสุด ๆ คือ 12 ตัวอักษร แนะนำว่า 15 ขึ้นไป) รหัสผ่านตั้งยาว ๆ ได้ ก็จะใช้ยาว ๆ ได้เลย ไม่จำเป็นต้องเปลี่ยนทุก 90 วันเหมือนที่เคยแนะนำกันอีกแล้ว จะใช้นานเท่าไหร่ก็ไม่ว่า จะบังคับเปลี่ยนก็ต่อเมื่อมีหลักฐานเพียงพอว่ารหัสผ่านรั่วไหล อย่าใช้รหัสผ่านเดียวกันหลายเว็บ/แอป มันมีความเสี่ยงที่เว็บ/แอป ทำรหัสผ่านเรารั่วไหล แล้วเอารหัสผ่านนั้นไป login เว็บอื่น ๆ ต่อ สำหรับคนที่กลัวจำรหัสผ่านยาว ๆ ไม่ได้ เรามีเทคโนโลยี password manager ช่วยตั้งและจำรหัสผ่านแทนได้นะครับ จะใช้ Google Chrome, Apple Password ไปเลยก็ได้ หรือจะใช้ LastPass, Dashlane, Bitwarden, 1Password ก็ได้ เดี๋ยวนี้ รหัสผ่านอย่างเดียวไม่พอจริง ๆ … Continue reading 2024 Password Guidelines

Talk is cheap. Show me the code.

About TH Sarabun

เรื่องสมมติที่เกิดขึ้นจริง สิบสองปีก่อนโน้น Google Docs ยังใช้ภาษาไทยไม่ได้ดีเท่าทุกวันนี้ อจก. กับ FOSS dev หยิบมือนึง อยากเอาฟอนต์ TH Sarabun New เข้าไปอยู่ใน Google Docs จะได้สร้างเอกสารภาษาไทยได้สมบูรณ์ขึ้น โดยเฉพาะเอกสารราชการซึ่งมีมติฯ ให้ใช้ TH Sarabun PSK (อ่านต่อไปเรื่อย ๆ จะเข้าใจ PSK กับ New) และบังเอิญหยิบมือนั้นได้คุยกับ Dave Crossland คนที่ทำโครงการ Google Fonts .. เวลานั้น Dave บอก อจก. ว่ากำลังจะมาเมืองไทยพอดี ถ้าจะให้ workshop เรื่องฟอนต์ สัญญาอนุญาต การออกแบบ โปรแกรม Dave ยินดีมาก ๆ สำหรับหยิบมือนั้น การคุยกันกับ Dave เป็นการปักหมุด เรื่องฟอนต์ไทยไป … Continue reading About TH Sarabun

19 January 2025

Vee

JSON Manipulation

There are many ways to manipulate JSON. I reviewed a few rapid ways today, which are using a command line tool called jq and libraries that support JSONPath query language.

jq

Awk is a powerful domain-specific language for text processing, but it lacks built-in support for manipulating hierarchical data structures like trees. jq fills this gap by providing a tool for transforming JSON data. However, one potential drawback is that jq requires a separate installation, which may add complexity to my workflow.

So I write a shell script using jq to extract user's names and user's urls from a cURL response, and then output it in TSV format.

curl -s 'https://mstdn.in.th/api/v1/timelines/public?limit=10' | jq '.[].account | [.username, .url] | @tsv' -r

The result looks like this:

kuketzblog https://social.tchncs.de/@kuketzblog

cats https://social.goose.rodeo/@cats

AlJazeera https://flipboard.com/@AlJazeera

TheHindu https://flipboard.com/@TheHindu

GossiTheDog https://cyberplace.social/@GossiTheDog

kuketzblog https://social.tchncs.de/@kuketzblog

weeklyOSM https://en.osm.town/@weeklyOSM

juanbellas https://masto.es/@juanbellas

noborusudou https://misskey.io/@noborusudou

jerryd https://mastodon.social/@jerryd

With a TSV file, we can use Awk, sed, etc. to manipulate them as usual.

JSONPath

JSONPath, which was explained in RFC 9535, is supported by many libraries and applications, e.g. PostgreSQL. Still, I try to it in Python by the jsonpath_nq library.

from jsonpath_ng import parse

import requests

res = requests.get("https://mstdn.in.th/api/v1/timelines/public?limit=10")

compiled_path = parse("$[*].account")

for matched_node in compiled_path.find(res.json()):

print(matched_node.value["username"] + "\t" + matched_node.value["url"])

It gave the same result to the shell script above. The code is a bit longer than the shell script. However, it can be integrated with many Python libraries.

1 January 2025

Kitt

ป๊า

ต้นเดือน กพ. คุณพ่อ อจก. ป่วย หมอพบลำไส้อุดตัน และภายหลังพบว่าสาเหตุมาจากมะเร็งลำไส้ใหญ่ระยะ 3 หมอ รพ.ขก. ผ่าตัดฉุกเฉินรักษาได้ทัน แต่พ่อติดเชื้อหลังผ่าตัด อจก. ย้ายพ่อมารักษาที่ รพ.ศรีฯ เชื้อดื้อยามาก ๆ ลากยาวมาจนถึงสงกรานต์ ร่างกายพ่ออ่อนแอจนมีการติดเชื้อราเพิ่ม หมอให้ยาฆ่าเชื้อ 5 ตัว ยาตัวสุดท้ายที่เหลือให้หมอใช้ได้ เป็นตัวที่แรงมาก ๆ และมีผลข้างเคียงแรงมาก ๆ แต่ก็ไม่สามารถลดการติดเชื้อได้ เราหมดทางรักษาการติดเชื้อของพ่อ พ่ออดทนกับการรับยาฆ่าเชื้อมาจนถึงเมื่อวาน เวลา 11:30 เป็นเวลาที่เราได้พบกับทีมรักษาประคับประคอง (palliative care) ครอบครัวเราตัดสินใจรับ palliative care ในช่วงท้าย ทำให้ครอบครัวเรามีเวลาอยู่กับท่าน และเลือกการประคับประคองที่ทำได้ สำหรับบางกรณี อาจจะประคองกันได้เป็นเดือน ๆ ทำให้ครอบครัวมีเวลาได้อยู่ด้วยกัน ใช้ชีวิตได้เกือบจะปกติ สำหรับกรณีของเรา ทางเลือกที่พ่อทรมานที่สุดอาจจะประคองได้ไม่กี่ชั่วโมง และทางเลือกที่ทรมานน้อยที่สุดประคองได้ไม่กี่นาที ครอบครัวเราเลือกที่พ่อทรมานน้อยที่สุด หยุดรับการให้ยาฆ่าเชื้อ จนเมื่อวานเย็น เราให้ถอด life support … Continue reading ป๊า

HNY2025

ฉลองด้วยการทำงานตั้งแต่ UTC+11 ยัน UTC+4 ตื่นสาย ๆ ก็นึกขึ้นได้ว่า .. “เรามี archive.org นี่หว่า!!!” เช็ค archive.org ว่ามี snapshot หน้าเว็บนี้ไหม ปรากฎว่ามีเยอะเลย! “รอดแล้วโว้ยยย” สรุปว่า .. โพสหลังมีนาคม 2017 จนถึง 30 ธันวาคม 2024 เอากลับมาโดย copy & paste มาจาก archive.org ล่ะจ้า

Got an operation

/me มีติ่งเนื้อที่ข้อพับหลังเข่าซ้าย เป็นอยู่หลายเดือนแล้ว ขนาดก็ไม่ได้โตขึ้นอะไร เมื่อวานไปผ่าตัดเอาออก ผ่าเล็กห้อง ER ฉีดยาชา ไม่มีราก ขั้วไม่ใหญ่ เฉือนออกไม่มาก แต่กรีดแผลกว้างหน่อย 15 นาทีเสร็จ หมอเห็นติ่งเนื้อเองก็ไม่แน่ใจว่าคืออะไร เลยส่งตรวจ ส่วนตอนนี้พันแผลไว้ ห้ามแกะ ห้ามโดนน้ำ นัดตัดไหมอีกสองสัปดาห์ค่อยแกะทีเดียว

Stitches removed + Lab result

Well, two weeks after the operation, I got stitches removed. The excision wound is somewhere between 4-5 cm long, it’s pretty intact. Still need to put a gauze pad for 4-5 more days though. So, what is it exactly ? Based on diagnostic surgical pathology, it’s an irritated seborrheic keratosis. Safe ? Yes.

5-min ETH Smart Contract

5-minute smart contract on ETH-compat blockchain network ? .. Yes, it is very possible .. if you already have some knowledge about it.

คำนวณวันสงกรานต์ปี 2563

สงกรานต์ปี 2563 เป็นปี จ.ศ. (2563 – 1181) = 1382 วันเถลิงศก ตรงกับ (1382 * 0.25875)+ floor(1382 / 100 + 0.38)– floor(1382/ 4 + 0.5)– floor(1382 / 400 + 0.595)– 5.53375= 357.5925 + 14 – 346 – 4 – 5.53375= 16.05875 = วันที่ 16 เมษายน 2563 เวลา 01:24:36 วันสงกรานต์ ตรงกับ 16.05875 – 2.165 = 13.89375 = วันที่ … Continue reading คำนวณวันสงกรานต์ปี 2563

คำนวณวันสงกรานต์ปี 2564

สงกรานต์ปี 2564 เป็นปี จ.ศ. (2564 – 1181) = 1383 วันเถลิงศก ตรงกับ (1383 * 0.25875)+ floor(1383 / 100 + 0.38)– floor(1383/ 4 + 0.5)– floor(1383 / 400 + 0.595)– 5.53375= 357.85125 + 14 – 346 – 4 – 5.53375= 16.3175 = วันที่ 16 เมษายน 2564 เวลา 07:37:12 วันสงกรานต์ ตรงกับ 16.3175 – 2.165 = 14.1525 = วันที่ … Continue reading คำนวณวันสงกรานต์ปี 2564

Inception ?

I knew I’m in a dream. It was a bad dream. I wanted to get out I knew I’m in a dream. I decided to commit a suicide so it would wake me up. I jumped out of a window, closed my eyes. It was pitch black. Eyes opened, I woke up .. .. in … Continue reading Inception ?

วันสงกรานต์ปี 2565

สงกรานต์ปี 2565 เป็นปี จ.ศ. (2565 – 1181) = 1384 วันเถลิงศก ตรงกับ(1384 * 0.25875)+ floor(1384 / 100 + 0.38)– floor(1384 / 4 + 0.5)– floor(1384 / 400 + 0.595)– 5.53375= 358.11 + 14 – 346 – 4 – 5.53375= 16.57625= วันที่ 16 เมษายน 2565 เวลา 13:49:48 วันสงกรานต์ ตรงกับ16.57625 – 2.165 = 14.41125= วันที่ 14 เมษายน 2565 … Continue reading วันสงกรานต์ปี 2565

สงกรานต์ปี 2566

เป็นปี จ.ศ. (2566 – 1181) = 1385 วันเถลิงศก ตรงกับ(1385 * 0.25875)+ floor(1385 / 100 + 0.38)– floor(1385 / 4 + 0.5)– floor(1385 / 400 + 0.595)– 5.53375= 358.36875 + 14 – 346 – 4 – 5.53375= 16.835= วันที่ 16 เมษายน 2566 เวลา 20:02:24 วันสงกรานต์ ตรงกับ16.835 – 2.165 = 14.67= วันที่ 14 เมษายน 2566 เวลา 16:04:48

วันสงกรานต์ปี 2567

วันสงกรานต์ปี 2567 เป็นปี จ.ศ. (2567 – 1181) = 1386 วันเถลิงศก ตรงกับ(1386 * 0.25875)+ floor(1386 / 100 + 0.38)– floor(1386 / 4 + 0.5)– floor(1386 / 400 + 0.595)– 5.53375= 358.6275 + 14 – 347 – 4 – 5.53375= 16.09375= วันที่ 16 เมษายน 2567 เวลา 02:15:00 วันสงกรานต์ ตรงกับ16.09375 – 2.165 = 13.92875= วันที่ 13 เมษายน 2567 … Continue reading วันสงกรานต์ปี 2567

31 December 2024

Vee

Four Reasons that I Sometimes Use Awk Instead of Python

Python is a fantastic language, but in specific situations, Awk can offer significant advantages, particularly in terms of portability, longevity, conciseness, and interoperability.

While Python scripts are generally portable, they may not always run seamlessly on popular Docker base images like Debian and Alpine. In contrast, Awk scripts are often readily available and executable within these environments.

Although Python syntax is relatively stable, its lifespan is shorter compared to Awk. For example, the print 10 syntax from the early 2000s is no longer valid in modern Python. However, Awk scripts from the 1980s can still be executed in current environments.

Python is known for its conciseness, especially when compared to languages like Java. However, when it comes to text processing and working within shell pipelines, Awk often provides more concise solutions. For instance, extracting text blocks between "REPORT" and "END" can be achieved with a single line in Awk: /REPORT/,/END/ { print }. Achieving the same result in Python typically involves more lines of code, including handling file input and pattern matching.

While Python can be embedded within shell scripts like Bash, aligning the indentation of multiline Python code with the surrounding shell script can often break the Python syntax. Awk, on the other hand, is less sensitive to indentation, making it easier to integrate into shell scripts.

Although different Awk implementations (such as Busybox Awk and GNU Awk) may have minor variations, Awk generally offers advantages over Python in the situations mentioned above.